In a significant move towards responsible artificial intelligence development, Apple has agreed to adhere to a set of voluntary AI safeguards established by President Joe Biden’s administration. This commitment aligns Apple with other major tech companies, including OpenAI, Amazon, Alphabet, Meta, and Microsoft, all of which have pledged to develop AI technologies with safety and accountability in mind.

The Importance of AI Safeguards

Initially announced by the White House as part of an Executive Order last year, the safeguards are designed to guide the development of AI systems. They emphasize the importance of testing for discriminatory tendencies, security vulnerabilities, and potential national security risks.

The principles outlined in these guidelines require companies to share the results of their AI system tests transparently with governments, civil society, and academia. This transparency is crucial for fostering an environment of accountability and peer review, ultimately promoting the creation of safer and more reliable AI technologies.

Apple's Commitment to Responsible AI

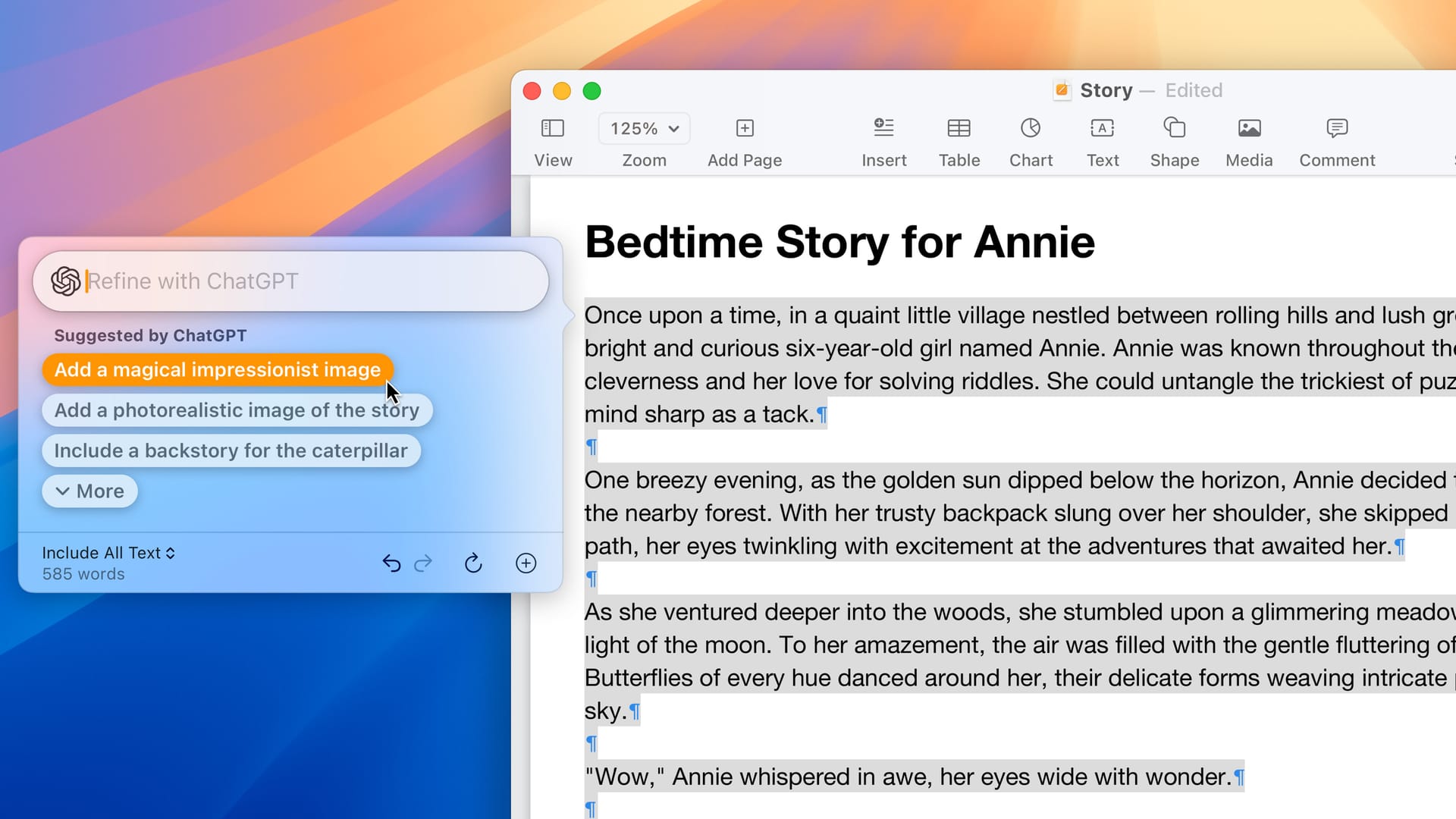

Apple's participation in these safeguards comes as the company prepares to introduce its own AI system, dubbed "Apple Intelligence." This new system will be integrated with OpenAI’s ChatGPT and is expected to enhance Siri's capabilities on iPhones. The iPhone 15 Pro, Pro Max, and all upcoming iPhone 16 models will support Apple Intelligence, as will Mac and iPad devices equipped with M-series Apple silicon chips.

Although Apple Intelligence is not yet available in beta for the upcoming iOS 18, iPadOS 18, or macOS Sequoia, some features are anticipated to roll out in beta soon, with a public release expected by the end of the year. Further enhancements, including a significant overhaul of Siri, are projected for the spring of 2025.

(Side note: I can't wait to get my hands on it!)

A Step Towards Self-Regulation

While the guidelines set forth by the Biden administration are not legally binding, they represent a collective effort by the tech industry to self-regulate and mitigate the potential risks associated with AI technologies. The executive order also mandates that AI systems undergo testing before being eligible for federal procurement, which underscores the administration's commitment to ensuring the safety and reliability of AI systems.

The guidelines include several key measures, such as:

- Safety Testing: AI developers must share their safety test results with the U.S. government, particularly for models that pose significant risks to national security or public safety.

- Establishing Standards: Various U.S. agencies will test AI systems to ensure they are safe, secure, and trustworthy.

- Fraud Protection: The Department of Commerce will develop guidance for content authentication and watermarking to label AI-generated content clearly.

- Cybersecurity Programs: There will be efforts to harness AI's capabilities to enhance the security of software and networks.

Takeaway

Apple's commitment to these AI safeguards is a crucial step in promoting responsible AI development. As the company prepares to integrate advanced AI features into its products, it is also taking measures to develop these technologies with a strong emphasis on safety, transparency, and accountability.

As we look ahead, it will be interesting to see how these commitments shape the future of AI technology and its integration into our daily lives. With Apple Intelligence on the horizon, the company is poised to play a leading role in creating a safer and more reliable AI ecosystem.