In an interesting development in the AI landscape, Apple has disclosed that the artificial intelligence models underpinning Apple Intelligence were pre-trained on processors designed by Google. The move highlights a growing trend among Big Tech companies to explore alternatives to Nvidia hardware for training state-of-the-art AI systems.

Foundation Language Models for Apple Intelligence

Apple's recently published paper, Apple Intelligence Foundation Language Models, details how these models were developed and trained. The paper introduces two main AI models: a roughly 3 billion parameter model optimized for on-device use (AFM-on-device) and a larger server-based language model designed for Private Cloud Compute (AFM-server).

These models are designed to handle a variety of tasks efficiently, accurately, and responsibly, including writing assistance, notification prioritization, summarization, and more. A key focus of the development process has been adherence to Responsible AI principles, so that the models are built with user privacy, fairness, and safety in mind. This carries Apple's long-standing privacy focus into AI.

Training Infrastructure and Process

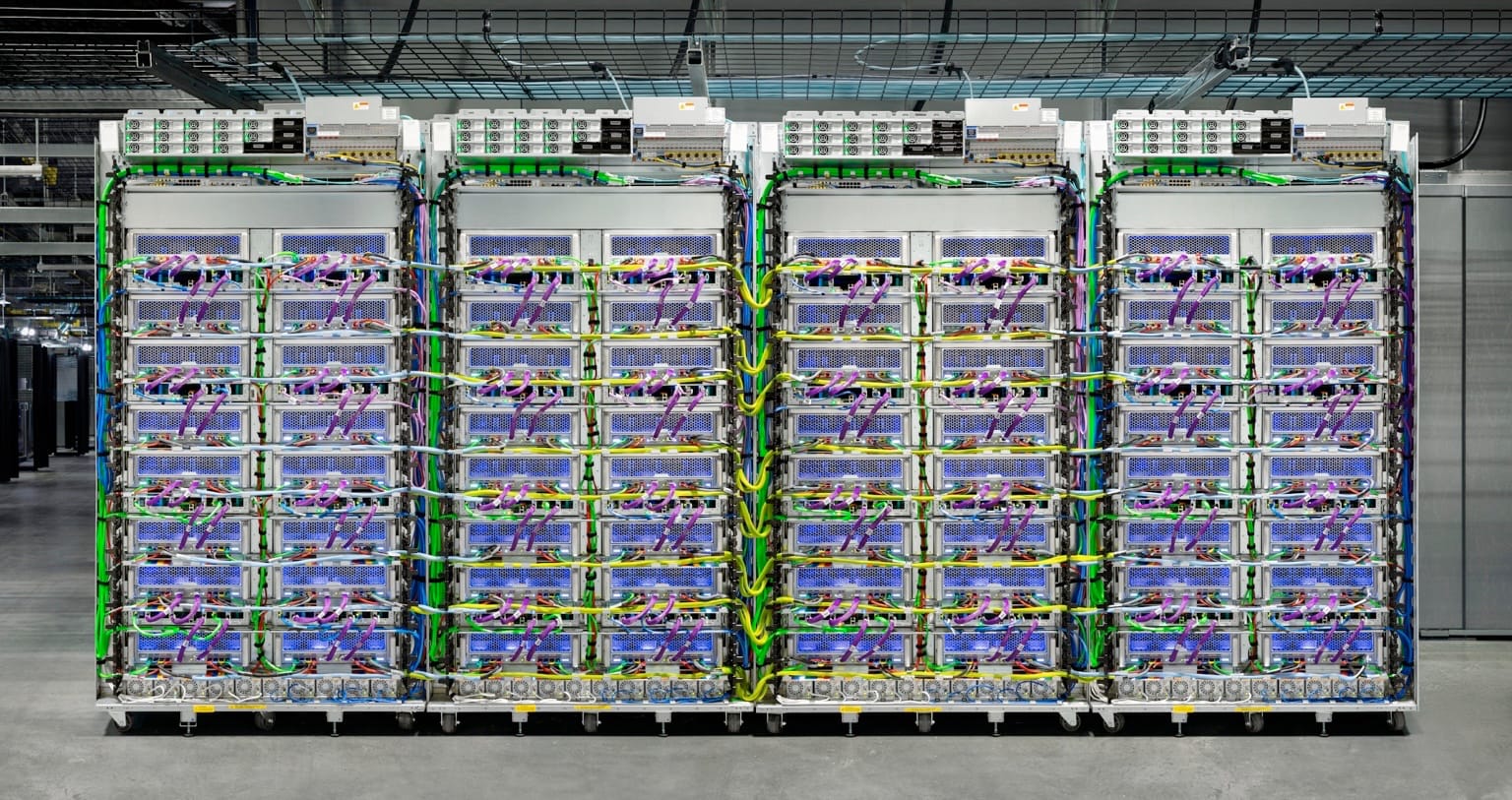

Apple's AI models, which power the Apple Intelligence features, were trained on Cloud TPUs, processors designed by Google. TPUs (Tensor Processing Units) are accelerators built specifically for the matrix math at the heart of machine learning, which makes it practical to train very large and complex AI models efficiently.

Training on Google’s TPUs

The larger model (AFM-server) was trained on 8192 TPUv4 chips. The chips were organized into groups that worked together to handle the vast amount of data and computation that training requires. With this setup, Apple sustained about 52% of the hardware's peak compute on useful model work, a measure known as model FLOPs utilization (MFU), which is quite efficient for a run of this size.

The smaller model (AFM-on-device), which is designed to run directly on devices like iPhones and iPads, was trained on 2048 TPUv5p chips. The smaller chip count reflects the smaller model: at roughly 3 billion parameters, it is compact enough to run on personal devices.
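
To make the utilization figure more concrete, here is a minimal sketch of how a utilization number of this kind is commonly estimated. It assumes the widely used rule of thumb that training costs about 6 FLOPs per parameter per token; that approximation, the function name, and every number in the example are illustrative assumptions, not values from Apple's paper.

```python
def model_flops_utilization(n_params, tokens_per_second, n_chips, peak_flops_per_chip):
    """Estimate MFU: useful model FLOPs per second divided by peak hardware FLOPs per second.

    Uses the common approximation of ~6 FLOPs per parameter per token
    (forward plus backward pass). This is a rule of thumb, not Apple's method.
    """
    achieved_flops_per_second = 6.0 * n_params * tokens_per_second
    peak_flops_per_second = n_chips * peak_flops_per_chip
    return achieved_flops_per_second / peak_flops_per_second


# Hypothetical numbers chosen only to keep the arithmetic easy to follow.
example_mfu = model_flops_utilization(
    n_params=1e9,              # 1 billion parameters (made up)
    tokens_per_second=1e6,     # training throughput (made up)
    n_chips=100,               # accelerator count (made up)
    peak_flops_per_chip=1e14,  # peak FLOPs per second per chip (made up)
)
print(f"Estimated MFU: {example_mfu:.0%}")
```

An MFU near 50% means about half of the chips' theoretical peak went into the model's own math, with the rest lost to communication, memory stalls, and other overhead.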

Data and Pre-Training Stages

Apple trained these models on a diverse set of high-quality data drawn from licensed publishers, public datasets, and publicly available web content. The company says it filtered out inappropriate content and personal information to protect the safety and privacy of the data used.

The training process for these models involved three main stages:

- Core Pre-Training: This initial stage did the heavy lifting. The server model was trained from scratch, while the on-device model was distilled and pruned from a larger model.

- Continued Pre-Training: In this stage, the models were trained with longer sequences of data, including specialized data like math and code, as well as additional licensed content.

- Context Lengthening: The final stage involved training the models with even longer sequences of data, using synthetic questions and answers to improve their ability to understand and generate long pieces of text.
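
The three stages above can be sketched as a simple ordered schedule. This is only an illustration of the structure: the `Stage` class, the helper function, and the sequence lengths are hypothetical placeholders, not figures taken from the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    seq_len: int         # placeholder sequence length, illustrative only
    extra_data: tuple    # data emphasized in this stage, per the list above


# Ordered schedule mirroring the three stages described above.
STAGES = (
    Stage("core_pre_training", 4096, ("licensed", "public datasets", "web")),
    Stage("continued_pre_training", 8192, ("math", "code", "additional licensed content")),
    Stage("context_lengthening", 32768, ("synthetic questions and answers",)),
)


def sequence_lengths_grow(stages):
    """Return True if each stage trains on longer sequences than the one before it."""
    return all(a.seq_len < b.seq_len for a, b in zip(stages, stages[1:]))


for stage in STAGES:
    print(f"{stage.name}: seq_len={stage.seq_len}, data={', '.join(stage.extra_data)}")
```

The one property the sketch encodes is the one the stages share: each step trains on longer sequences than the step before it.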

If you want a TL;DR for all of that: Apple used Google's TPUs and a careful, staged process to train its AI models, with the goal of making them powerful, efficient, and safe for users.

Focus on Responsible AI

Apple's commitment to Responsible AI is evident throughout the model development process. The company adheres to principles designed to empower users, represent them authentically, design with care, and protect privacy. These principles guide the creation of AI tools and models, ensuring they are developed responsibly and ethically.

The Final CPU Cycle

Apple's use of Google's custom chips for training its AI models underscores the dynamic and collaborative nature of the tech industry. By leveraging these powerful training resources, Apple aims to deliver advanced AI capabilities through its Apple Intelligence system, enhancing user experiences across its ecosystem of devices.

Remember, this is about training the models behind Apple Intelligence. That is very different from running them, which happens on Apple's own custom silicon.

As these AI features evolve, Apple says it remains committed to maintaining the highest privacy, fairness, and safety standards. Stay tuned for more updates as Apple rolls out these new Apple Intelligence capabilities.